An AI startup has just raised US$15 million. The founders had every reason to be pleased, and others offered congratulations and polite praise, but the news unexpectedly drew mockery from a Turing Award winner.

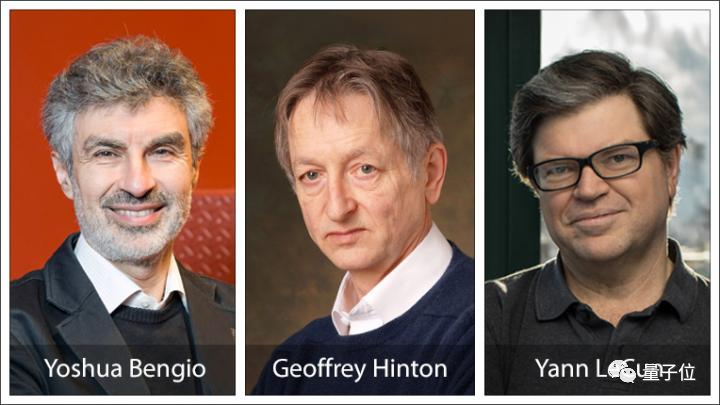

The sarcasm came from Yann LeCun, one of the three famous giants of deep learning.

And the target of LeCun's mockery was none other than his New York University colleague Gary Marcus, who is well known for criticizing AI and deep learning.

Professor Marcus is no stranger to people who follow AI, some of whom have even nicknamed him "Sniff" Marcus.

But this time, just as he was celebrating the funding round as founder and CEO of the AI company, he was bluntly mocked by LeCun.

Marcus is raising money with deep learning, and LeCun clearly minds.

What does Marcus's startup do?

Why did LeCun go out of his way to taunt him?

Let's start with the company that Marcus founded.

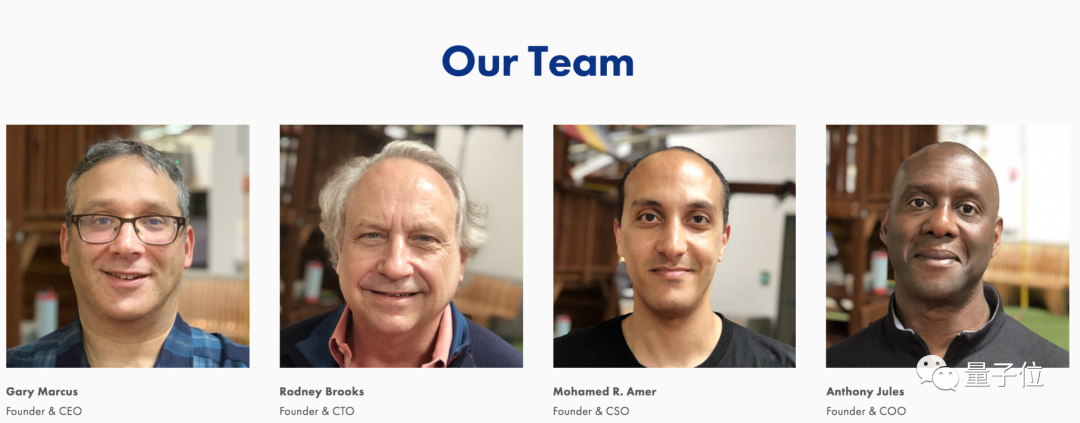

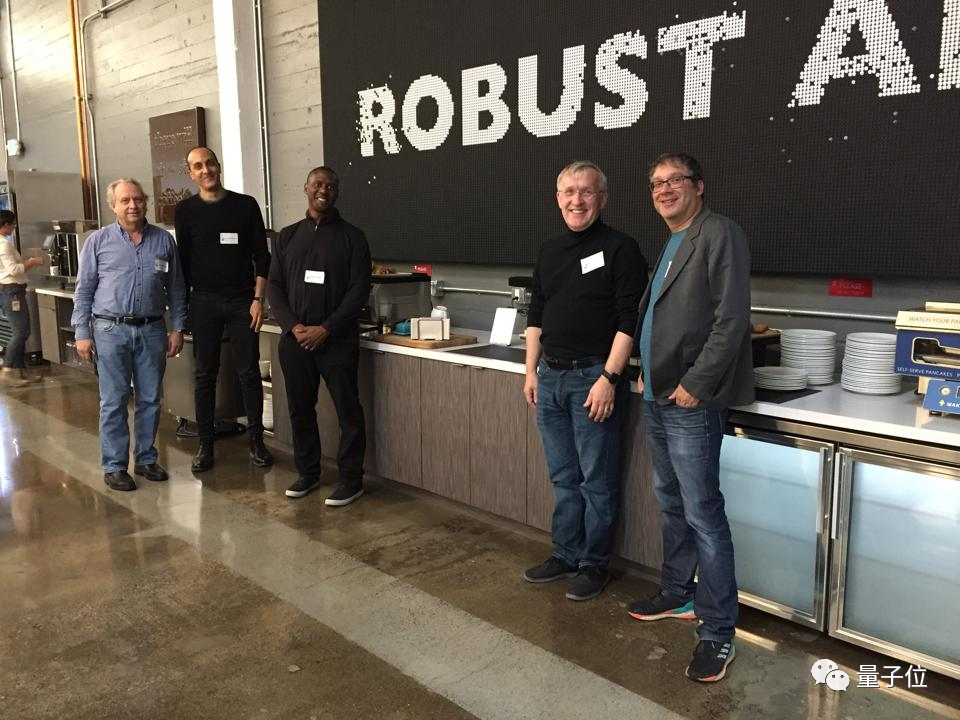

Robust.AI was co-founded in June 2019 by the now-familiar Gary Marcus, MIT professor Rodney Brooks, and several other robotics scientists.

Marcus and Brooks, as CEO and CTO respectively, lead the new company and form its core.

Rodney Brooks is also a major figure in AI and robotics and a former director of the MIT Artificial Intelligence Laboratory.

The goal of Robust.AI is to build a "robot cognitive engine" that can be used in many industrial scenarios.

In layman's terms, this is a company that builds software systems.

They are building AI algorithms for industrial robots that not only serve specific scenarios but also surpass current products in "cognition" of the environment, so that the robots can be deployed quickly on different production lines and become "general-purpose".

Robust.AI currently has 25 employees and is still hiring. Marcus told Forbes magazine that the company is already working with a customer and expects to deliver its first batch of products in 2021.

Two days ago, foreign media reported on Robust.AI’s latest US$15 million financing. Together with last year’s seed round of US$7.5 million, a total of US$22.5 million has been raised.

All of this is perfectly normal, and nothing here is outrageous.

However, LeCun couldn't stand it.

You, Marcus, won fame and attention by saying that AI doesn't work and that deep learning has poor cognitive ability and is opaque...

And now you turn around and use the very same thing to start a company and "rake in money" from investors?

Interesting indeed.

"True Fragrance" Marcus

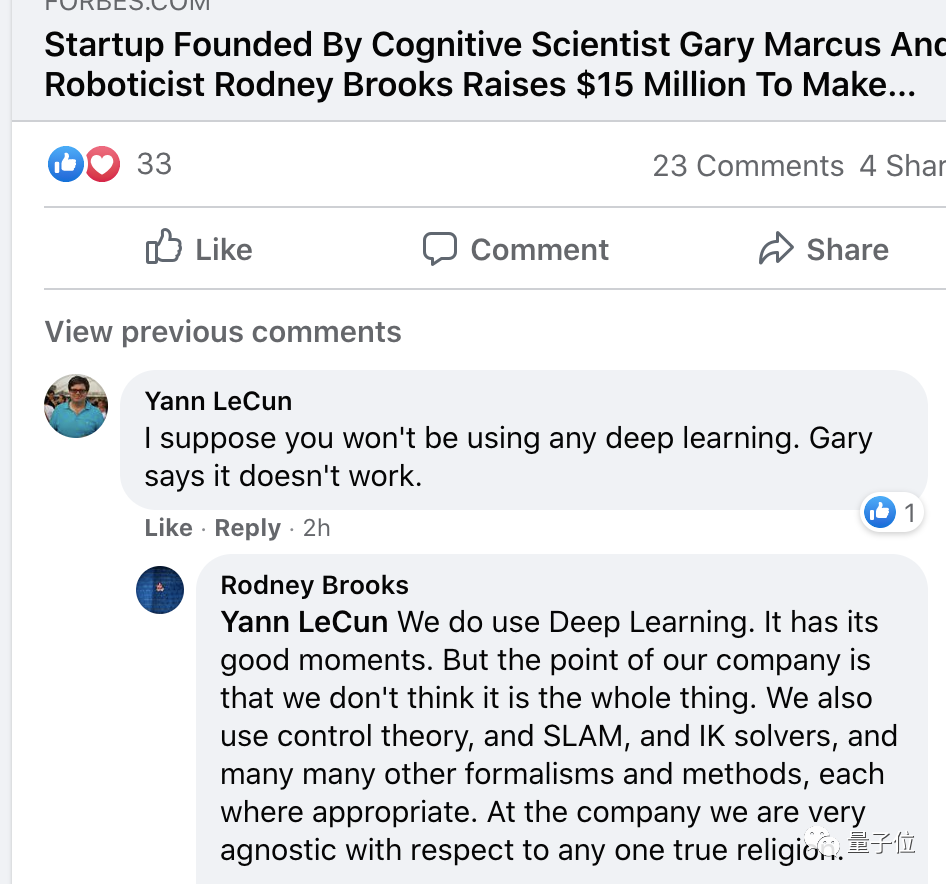

"You shouldn't use deep learning? Gary (referring to Marcus) said that deep learning doesn't work."

Beneath the news of Robust.AI's financing, LeCun asked this pointed question.

After all, Marcus's earlier criticism of deep learning had been sharp and unrelenting, to the point of being outright anti-deep-learning.

This time, however, Marcus behaved differently and did not take up the challenge directly.

The one who responded to LeCun in the Facebook thread was Rodney Brooks, CTO of Robust.AI and Panasonic Professor of Robotics at MIT.

Brooks answered honestly: "We did use deep learning techniques."

△ The heavyweights arguing in the comments under the news

But he went on to explain that deep learning is not the whole of the company's technical approach.

In addition to deep learning, the company uses control theory, SLAM (simultaneous localization and mapping), IK (inverse kinematics) solvers, and other techniques, without committing to any single school of thought.

"Oh, what you are doing is what everyone else is doing."

LeCun followed up with another jab. The implication: there is nothing innovative or different here, and after such fierce criticism one might have expected Marcus to actually do better himself.

Of course, if you are unfamiliar with Marcus's earlier criticism of AI, which at its core is criticism of deep learning, you may wonder how a Turing Award winner could be so "petty"...

But a few years ago, Marcus was really merciless.

Marcus spared no effort in criticizing deep learning, calling it "poor in perception" and "too dependent on data"...

Almost every time the AI field made major progress, he rushed to pour cold water on it, as with his criticism of GPT-3 some time ago.

Sometimes it even looked like nitpicking. GPT-3, for example, was a marked improvement over the previous two generations.

But Marcus could always find shortcomings in the current version, saying that this AI could not be used in fields such as medical care and had many problems in long-tail scenarios... To be honest, it did look like criticism for criticism's sake.

So now the company he himself founded uses deep learning. Although it does tackle real problems, it still runs on raising and burning money...

He has not escaped the "law of true fragrance" (ending up embracing what one once scorned), and he has become the very thing he criticized.

Regarding Yann LeCun

So it is probably understandable why Yann LeCun is so upset.

After all, what Marcus opposes is LeCun's most important academic achievement. It is also the AI revival that he, Hinton, Bengio, and others spent more than a decade out in the cold to bring about, and he knows how hard-won it is.

They naturally know the limitations of deep-learning-driven AI, which is why they keep looking for breakthroughs and trying to open the black box... They are the constructive camp.

In fact, LeCun did not respond much to Marcus's previous criticism.

But this time he saw the perfect opportunity, and his question was one he already knew the answer to: the Forbes report on Robust.AI's financing had already stated that the company uses deep learning.

Put plainly, LeCun simply wanted to mock this behavior of eating from the pot while smashing it.

Before his "what everyone else is doing" reply, LeCun also wrote a long paragraph satirizing Marcus, to this effect:

I'm trying to write an academic monograph, and I'm sure this book is more offensive than anything Marcus said...

Whether you work in AI, CS, or neuroscience... after reading it, you will learn major things about your field that you have never heard of...

There will be no progress in these fields for centuries... I look forward to seeing readers' blood pressure rise.

Hahahaha...

LeCun really does "hold grudges", and he made a point of including "neuroscience".

That is because Marcus is a professor of psychology and neuroscience. In an earlier heated dispute, some people said Marcus was "unqualified" to criticize things outside his own field.

Another Gary joins the debate

Gary Marcus, the target of LeCun's sarcasm, never responded...

Instead, another Gary came out to argue with LeCun.

Gary Bradski is also a well-known leader in the field of machine learning. His best-known work is the OpenCV computer vision library.

The gist of what he said:

LeCun, wait a moment, I, Gary, have something to say. I think deep learning is a revolutionary advancement and a practical tool.

Everyone is now using differentiable methods for modeling, rendering, and simulation. This is very important because everyone adds these elements as variables in PyTorch.

A piece of code can be made differentiable with a little modification, which lets deep learning with PyTorch progress rapidly.

Deep learning partly demonstrates the feasibility of general artificial intelligence. The problem is the lack of standards for interpreting and attributing its results, yet few people question this.

I wish the company success! May your gradients always be clear and usable.

Gary Bradski was much more tactful, acknowledging the current importance of deep learning while pointing out that the models cannot be interpreted.
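Bradski's remark that ordinary code can be differentiated with a little modification can be illustrated without PyTorch itself. The following is only a minimal sketch in pure Python, using forward-mode automatic differentiation with dual numbers. The names `Dual`, `falling_height`, and `derivative` are hypothetical and invented for this illustration; this is not how PyTorch is implemented (PyTorch uses reverse-mode autograd on tensors), it only shows the general idea.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dual:
    """Hypothetical helper: a value bundled with its derivative."""
    value: float
    deriv: float = 0.0

    def __add__(self, other):
        other = _lift(other)
        return Dual(self.value + other.value, self.deriv + other.deriv)

    __radd__ = __add__

    def __sub__(self, other):
        other = _lift(other)
        return Dual(self.value - other.value, self.deriv - other.deriv)

    def __rsub__(self, other):
        return _lift(other) - self

    def __mul__(self, other):
        other = _lift(other)
        # Product rule: (u * v)' = u' * v + u * v'
        return Dual(self.value * other.value,
                    self.deriv * other.value + self.value * other.deriv)

    __rmul__ = __mul__


def _lift(x):
    """Treat plain numbers as constants (derivative 0)."""
    return x if isinstance(x, Dual) else Dual(float(x))


def falling_height(t, h0=100.0, g=9.8):
    """Ordinary 'simulation' code: height of a dropped object at time t.

    Nothing here mentions derivatives; it works on floats and on Dual alike.
    """
    return h0 - 0.5 * g * t * t


def derivative(f, x):
    """Differentiate f at x by seeding the input with derivative 1."""
    return f(Dual(x, 1.0)).deriv


if __name__ == "__main__":
    print("height at t=2:", falling_height(2.0))
    print("velocity at t=2:", derivative(falling_height, 2.0))
```

The only "modification" is the type of the input: `falling_height` itself is unchanged, which is the point Bradski is making about dropping simulation or rendering code into a differentiable framework.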

He put into words both the current state of deep learning and its hidden worries. These are the crux of Marcus's long-running attacks on deep learning and of his feuds with various leading figures, and they are also the focus of the debate over the future path of artificial intelligence.

What is "wrong" with deep learning?

The most representative piece of Marcus's AI criticism is a long article he wrote in 2018, which comprehensively and systematically set out his views on deep learning.

The article can be summarized as ten major flaws of deep learning.

1. Deep learning is highly dependent on data

Humans can easily learn abstract relationships from clear definitions.

Deep learning, however, cannot learn abstract concepts from explicit verbal definitions and routinely needs hundreds of millions of training examples.

Geoff Hinton also expressed concerns about the deep learning system's reliance on large amounts of labeled data.

2. Deep learning has limited capacity for transfer

The "deep" in "deep learning" refers to a technical, architectural property, namely the stacking of many hidden layers. This kind of "depth" does not imply a deep understanding of abstract concepts.

Once the task scenario changes, new data must be collected and the model retrained.

3. Deep learning has no natural way to handle hierarchical structure

Most current deep learning-based language models treat sentences as sequences of words.

When it encounters an unfamiliar sentence structure, a recurrent neural network (RNN) cannot systematically represent and extend the recursive structure of the sentence.

This is because the correlations among the sets of features that deep learning learns are flat, not hierarchical.

4. Deep learning can't handle open-ended reasoning

On SQuAD, the Stanford Question Answering Dataset, current machine reading comprehension systems answer well when the answer is contained in the given text, but perform much worse when it is not.

In other words, current systems do not have the same reasoning ability as humans.

5. Deep learning is not transparent enough

The "black box" nature of neural networks has always attracted much attention. But this issue of transparency has not been resolved so far.

6. Deep learning has not been well integrated with prior knowledge

Because it lacks prior knowledge, deep learning struggles with open-ended problems, such as how to fix a bicycle that has a rope tangled in its spokes.

Such seemingly simple questions draw on many different kinds of real-world knowledge, and no dataset covers them.

7. Deep learning cannot distinguish causation from correlation

Deep learning systems learn complex correlations between inputs and outputs, but not the causal relationships between them.

8. Deep learning needs a strictly stable environment to work

The logic of deep learning works best in a highly stable environment. In a game like chess, for example, the rules never change, whereas in political and economic life change is the only constant.

9. Deep learning is only an approximation

Deep learning performs well in some specific areas, but it is also easy to be fooled.

10. Deep learning is difficult to engineer

Robust engineering is hard to achieve with deep learning, because it is difficult to guarantee that a machine learning system will work effectively in a brand-new environment.

These ten "charges" cut to the bone. Deep learning does suffer from problems such as mechanical dependence on training, opacity, and unclear causality.
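The seventh charge, that learning correlations is not the same as learning causes, can be made concrete with a toy simulation. In the sketch below, every name and number is made up for illustration and none of it comes from Marcus's article. A hidden factor `z` drives both `x` and `y`, so the two are strongly correlated in observational data, yet setting `x` by hand has essentially no effect on `y`.

```python
import random


def mean(values):
    return sum(values) / len(values)


def pearson(xs, ys):
    """Plain Pearson correlation coefficient."""
    mx, my = mean(xs), mean(ys)
    cov = sum((a - mx) * (b - my) for a, b in zip(xs, ys))
    var_x = sum((a - mx) ** 2 for a in xs)
    var_y = sum((b - my) ** 2 for b in ys)
    return cov / (var_x * var_y) ** 0.5


def sample(rng, n, force_x=None):
    """Draw n (x, y) pairs; if force_x is given, x is set by intervention."""
    xs, ys = [], []
    for _ in range(n):
        z = rng.gauss(0, 1)              # hidden common cause
        if force_x is None:
            x = z + rng.gauss(0, 0.1)    # observational: x follows z
        else:
            x = force_x                  # intervention: x is set by hand
        y = z + rng.gauss(0, 0.1)        # y depends on z only, never on x
        xs.append(x)
        ys.append(y)
    return xs, ys


rng = random.Random(0)

# Observational data: x and y move together because both follow z.
obs_x, obs_y = sample(rng, 5000)
observed_corr = pearson(obs_x, obs_y)

# Interventions: force x to two very different values and compare y.
_, y_when_x_low = sample(rng, 5000, force_x=-2.0)
_, y_when_x_high = sample(rng, 5000, force_x=2.0)
intervention_effect = mean(y_when_x_high) - mean(y_when_x_low)

if __name__ == "__main__":
    print("correlation between x and y:", round(observed_corr, 3))
    print("change in y when x is forced from -2 to 2:",
          round(intervention_effect, 3))
```

A model fitted only on the observational pairs would predict `y` from `x` very well, but that predictive skill says nothing about what happens when `x` is changed deliberately, which is the distinction the criticism turns on.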

However, if Marcus's sharp criticism had stayed within academic debate, LeCun might not have held such a grudge.

Deep learning is currently the hottest tool in the AI field.

Marcus has said bluntly that academia has overhyped deep learning and in effect misled people about the path of AI development.

These issues are the fundamental basis on which Marcus attacks deep learning and questions the progress of AI.

Over the years, Marcus has repeatedly clashed with leading figures in the AI industry, including the big three of deep learning, Andrew Ng, and others.

Their defense centered on the idea that "deep learning does not define a thing, but points out a direction."

It makes no sense to ask whether deep learning can do something. What makes sense is to ask how to train it to do so.

Deep learning is a way of thinking and a methodology.

Clearly, the leading figures of AI do not want deep learning rejected at its root; otherwise AI would once again fall silent and sink into a long dark night.

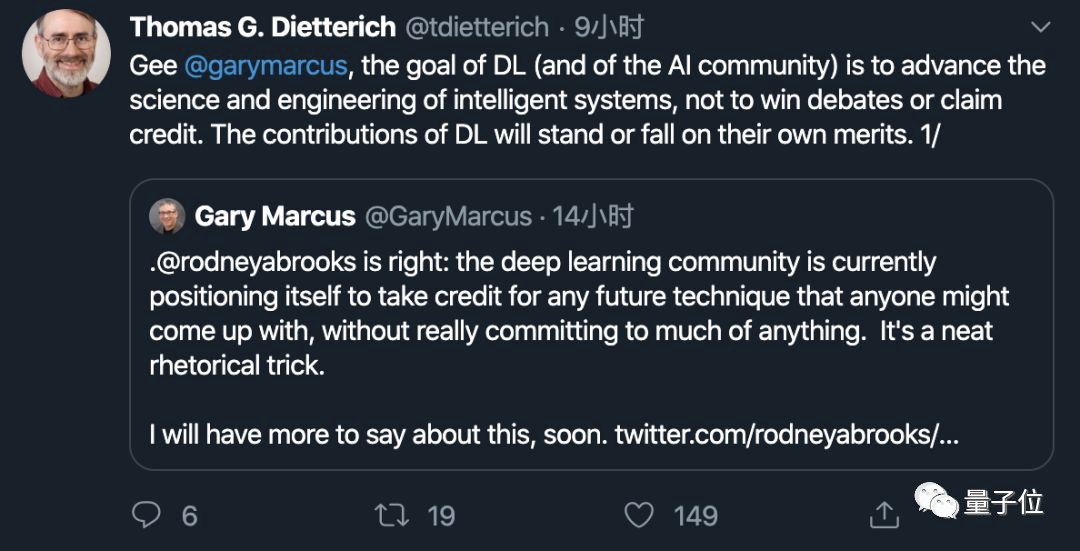

Faced with Marcus's questioning of the very nature of deep learning, only Thomas G. Dietterich, former president of AAAI, offered a response after one debate:

"The contribution or criticism of deep learning does not come from results."

In addition, Marcus even had a face-to-face debate with LeCun on the question "Does AI need human-like capabilities?"

At its heart, that debate was about this series of flaws in deep learning.

Marcus believes that such AI will not go far, while LeCun believes that it has great potential and does not need brain-like capabilities to meet real needs.

Of course, they couldn't convince each other at all.

On the one hand, deep learning has swept the field and plays a key role in much AI research. Some of the more mature techniques are already deployed in practice, and the capabilities deep learning has demonstrated cannot be matched by other methods.

On the other hand, the limitations and defects of deep learning are indeed hard to overcome in the short term.

So obviously, this is a battle between the "revolutionaries" and the "reformers".

The "revolutionaries", represented by Marcus, reject deep learning across the board and want it and AI reassessed from scratch...

LeCun and others, having finally used deep learning to bring about the AI renaissance, are well aware of its shortcomings and of AI's current challenges. Yet they hope to keep finding solutions as the field develops, not to give up eating for fear of choking...

The trouble is that Marcus, the "revolutionary", only tears down without building anything, and now he is raising money with the very technology he criticized.

That LeCun did not resort to swearing probably shows considerable restraint.

What do you think?